News & Press

Saudi Arabia Announces $1.5 Billion Expansion to Fuel AI-powered Economy with AI Tech Leader Groq

Mountain View, California & Riyadh, Saudi Arabia – February 10, 2025 – Silicon Valley AI pioneer Groq has secured a $1.5 billion commitment from the Kingdom of Saudi Arabia (KSA) for expanded delivery of its advanced LPU-based AI inference infrastructure. Announced at LEAP 2025, this major agreement advances the Kingdom’s position as a global leader in AI computing infrastructure while meeting rapidly growing regional demand.

This agreement follows the operational excellence Groq demonstrated in building the region’s largest inference cluster in December 2024. Brought online in just eight days, the rapid installation established a critical AI hub to serve surging compute demand globally.

From its state-of-the-art data center in Dammam, Saudi Arabia, Groq is now delivering market-leading AI inference capabilities to customers worldwide through GroqCloud™. At LEAP 2025, Jonathan Ross, CEO and Founder of Groq, alongside Tareq Almin and Ahmad O. Al-Khowaiter, Chief Technology Officer of Saudi Aramco, gave live demonstrations of reasoning LLMs, Allam (a model created in the KSA), and text-to-speech models in English and Arabic.

The $1.5 billion commitment to Groq AI infrastructure marks a defining moment for both Groq and the Kingdom as they work toward the Vision 2030 goal of an AI-powered economy in Saudi Arabia.

“It’s an honor for Groq to be supporting the Kingdom’s 2030 vision,” said Jonathan Ross, CEO and Founder of Groq. “We are excited to work alongside Saudi innovators to shape the next chapter of AI.”

Learn more about how Groq is changing the AI industry, and about getting access to GroqCloud, at groq.com.

About Groq

Groq builds fast AI inference technology. GroqCloud™ delivers exceptional AI compute speed, quality, and energy efficiency for enterprises and developers with its LPU™ AI inference technology. Groq, headquartered in Silicon Valley, provides cloud and on-prem solutions at scale for AI applications. The LPU and related systems are designed, fabricated, and assembled in North America. Build fast with Groq at groq.com.

Source: Saudi Arabia Announces $1.5 Billion Expansion to Fuel AI-powered Economy with AI Tech Leader Groq

Hooks on Tren Finance

What are Hooks?

Hooks are modular, programmable extensions integrated into smart contracts that allow developers to insert and execute custom logic at predetermined points during a transaction’s lifecycle. These predetermined points, often referred to as hook points, act as checkpoints where specific actions can be triggered. This concept is similar to middleware or plugin systems in traditional software, where additional functionalities can be layered onto existing applications without altering their core logic. Hooks enable protocols to become more flexible, customizable, and interoperable, all without requiring significant overhauls of the core codebase.
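To make the hook-point idea concrete, here is a minimal Python sketch of a plugin-style hook registry wrapped around a fixed piece of core logic. It is illustrative only: it is not Tren Finance's implementation and not smart-contract code, and the hook-point names (`before_transfer`, `after_transfer`), the `HookRegistry` class, the `transfer` function, and the transfer limit of 100 are all hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List

# Hypothetical hook points; a real protocol defines its own names.
HOOK_POINTS = ("before_transfer", "after_transfer")

Hook = Callable[[dict], None]


class HookRegistry:
    """Stores custom logic keyed by hook point, much like a plugin system."""

    def __init__(self) -> None:
        self._hooks: Dict[str, List[Hook]] = defaultdict(list)

    def register(self, point: str, hook: Hook) -> None:
        if point not in HOOK_POINTS:
            raise ValueError(f"unknown hook point: {point}")
        self._hooks[point].append(hook)

    def run(self, point: str, ctx: dict) -> None:
        # Hooks run in registration order; any hook may raise to stop the flow.
        for hook in self._hooks[point]:
            hook(ctx)


def transfer(balances: Dict[str, int], sender: str, receiver: str,
             amount: int, hooks: HookRegistry) -> None:
    """Core logic stays unchanged; extensions attach only at hook points."""
    ctx = {"sender": sender, "receiver": receiver, "amount": amount}
    hooks.run("before_transfer", ctx)  # checkpoint before any state change
    if balances.get(sender, 0) < amount:
        raise ValueError("insufficient balance")
    balances[sender] -= amount
    balances[receiver] = balances.get(receiver, 0) + amount
    hooks.run("after_transfer", ctx)  # checkpoint after the state change


def cap_transfer(ctx: dict) -> None:
    # Example policy hook: reject transfers above a hypothetical limit of 100.
    if ctx["amount"] > 100:
        raise ValueError("transfer exceeds limit")


events: List[dict] = []

registry = HookRegistry()
registry.register("before_transfer", cap_transfer)
registry.register("after_transfer", events.append)  # record completed transfers

balances = {"alice": 150, "bob": 0}
transfer(balances, "alice", "bob", 60, registry)
print(balances)  # {'alice': 90, 'bob': 60}
```

Adding the limit or the event log required no change to `transfer` itself, which is the point of hooks. One simplification to note: on-chain, a failing hook would typically revert the whole transaction, whereas this sketch only gets that effect for before-hooks, which raise before any balance is modified.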

Plexe: Production-ready custom AI from natural language

Automated Machine Learning (AutoML) systems are an important part of the machine learning engineering toolbox. However, existing solutions often require substantial amounts of compute, and therefore extended running times, to achieve competitive performance.

Cohere launches new AI models to bridge global language divide

Cohere today released two new open-weight models in its Aya project to close the language gap in foundation models.

Aya Expanse 8B and 32B, now available on Hugging Face, extend performance gains across 23 languages. Cohere said in a blog post that the 8B parameter model "makes breakthroughs more accessible to researchers worldwide," while the 32B parameter model provides state-of-the-art multilingual capabilities.

The Aya project seeks to expand access to foundation models in global languages beyond English. Cohere for AI, the company's research arm, launched the Aya initiative last year. In February, it released the Aya 101 large language model (LLM), a 13-billion-parameter model covering 101 languages. Cohere for AI also released the Aya dataset to help make training data available in more languages.

Aya Expanse builds on much of the same recipe used for Aya 101.

“The improvements in Aya Expanse are the result of a sustained focus on expanding how AI serves languages around the world by rethinking the core building blocks of machine learning breakthroughs,” Cohere said. “Our research agenda for the last few years has included a dedicated focus on bridging the language gap, with several breakthroughs that were critical to the current recipe: data arbitrage, preference training for general performance and safety, and finally model merging.”

Aya performs well

Cohere said the two Aya Expanse models consistently outperformed similar-sized AI models from Google, Mistral and Meta.

On multilingual benchmarks, Aya Expanse 32B outperformed Gemma 2 27B, Mixtral 8x22B and even the much larger Llama 3.1 70B. The smaller 8B model also outperformed Gemma 2 9B, Llama 3.1 8B and Ministral 8B.

Cohere developed the Aya models using a data sampling method called data arbitrage to avoid the gibberish that models can generate when they rely on synthetic data. Many models are trained on synthetic data created by a "teacher" model. However, good teacher models are hard to find for many languages, especially low-resource ones.

Cohere also focused on guiding the models toward "global preferences" that account for different cultural and linguistic perspectives. The company said it found a way to improve both performance and safety while steering the models' preferences.

“We think of it as the ‘final sparkle’ in training an AI model,” the company said. “However, preference training and safety measures often overfit to harms prevalent in Western-centric datasets. Problematically, these safety protocols frequently fail to extend to multilingual settings. Our work is one of the first that extends preference training to a massively multilingual setting, accounting for different cultural and linguistic perspectives.”

Models in different languages

The Aya initiative focuses on advancing research into LLMs that perform well in languages other than English.

Many LLMs eventually become available in other languages, especially widely spoken ones, but finding data to train models in those languages is difficult. English, after all, tends to be the dominant language of government, finance, internet conversation and business, so English data is far easier to find.

Accurately benchmarking models in different languages can also be difficult, because the quality of translated benchmarks varies.

Other developers have released their own language datasets to further research into non-English LLMs. OpenAI, for example, made its Multilingual Massive Multitask Language Understanding (MMMLU) dataset available on Hugging Face last month. The dataset aims to help test LLM performance across 14 languages, including Arabic, German, Swahili and Bengali.

Cohere has been busy these last few weeks. This week, the company added image search capabilities to Embed 3, its enterprise embedding product used in retrieval augmented generation (RAG) systems. It also enhanced fine-tuning for its Command R 08-2024 model this month.

Source: Cohere launches new AI models to bridge global language divide

HIPAA Support [ for Marketing API & Analytics ]

HIPAA is a US healthcare law that restricts how health-specific data and topics [ e.g. conditions ] can be shared with third parties, including for remarketing purposes.

Aramco Digital and Groq Announce Progress in Building the World’s Largest Inferencing Data Center in Saudi Arabia Following LEAP MOU Signing

Mountain View, CA & Riyadh, Saudi Arabia – 12 September 2024 – Following the signing of a Memorandum of Understanding (MoU) during LEAP, Aramco Digital, the digital and technology subsidiary of Aramco, and Groq, a leader in AI inference and creator of the Language Processing Unit (LPU), announced their partnership to establish the world’s largest inferencing data center in the Kingdom of Saudi Arabia. This strategic collaboration marks a significant step forward in advancing the Kingdom’s digital transformation initiatives and solidifying its position as a global leader in AI and cloud computing.

Cohere and NRI Collaborate to Launch New Financial AI Platform

Today, Cohere and Japanese consulting firm Nomura Research Institute (NRI) announced a collaboration to launch the NRI Financial AI Platform. The new platform is designed to help global financial institutions increase productivity and streamline their operations with the power of Cohere’s highly secure enterprise-grade AI technology. The platform will officially launch in the first half of 2025.

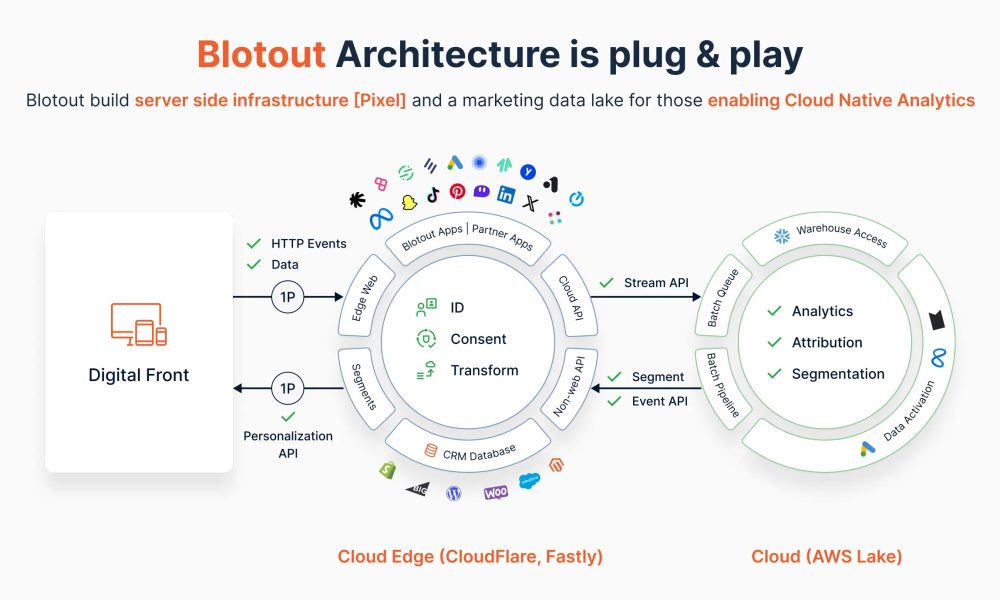

Server Side Pixel Infrastructure

Introducing Blotout Edgetag Infrastructure as a Service

Now that we have been well received on Shopify and have multiple embedded partners who rely on our Pixel for API delivery, we have decided to expand into a common D2C server-side platform that enables any [ current or future ] partner to deploy its own server-side pixel [ plus client-side code ] on Blotout infrastructure.

Groq Supercharges Fast AI Inference For Meta Llama 3.1

Mountain View, Calif. – July 23, 2024 – Groq, a leader in fast AI inference, launched Llama 3.1 models powered by its LPU™ AI inference technology. Groq is proud to partner with Meta on this key industry launch and to run the latest Llama 3.1 models, including 405B Instruct, 70B Instruct, and 8B Instruct, at Groq speed. The three models are available on the GroqCloud Dev Console, used by a community of over 300K developers already building on Groq® systems, and on GroqChat for the general public.